What Are the Foundational Concepts of Visual Effects (VFX)?

Visual effects (VFX) have the power to blur the line between imagination and reality, and they shape the way we see and feel stories on screen. Behind every seamless illusion is a rich foundation of creative and technical mastery that often goes unseen. Understanding that foundation is what makes innovation possible, to the point where the real and the imagined can no longer be told apart.

VFX serves as the silent architect, building emotion, tension, awe, and immersion frame by frame. It allows an idea to evolve into a moment of cinematic truth that impresses and moves viewers.

The foundational concepts of visual effects are both technical and deeply creative. Behind every mind-blowing effect is a complex blend of technology, creativity, and problem-solving. Understanding these foundational concepts will help you evolve a single idea into something extraordinary.

In this blog, we explore the essential pillars of VFX: the processes, tools, and real-world applications. Whether you’re a filmmaker, an aspiring VFX artist, or just a curious mind, this breakdown will give you fresh insight into how digital magic is made.

6 Foundational Concepts of Visual Effects (VFX)

1. Compositing: Building the Invisible Canvas

Compositing is the art of combining visual elements from different sources into a single image. Think of it as a collage whose pieces form one cohesive scene, but with a level of realism that can make spaceships look like they’re truly hovering over a city skyline.

Tools like Adobe After Effects, Fusion, and Nuke are popular for this technique.
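At the pixel level, layering a foreground over a background usually comes down to an alpha blend. The snippet below is a minimal sketch of the standard "over" operation on a single RGBA pixel, assuming straight (non-premultiplied) alpha and channel values between 0 and 1. The function name `over` and the sample pixel values are illustrative assumptions, not taken from any specific compositing package.

```python
def over(fg, bg):
    """Composite one RGBA pixel over another.

    Assumes straight (non-premultiplied) alpha and floats in [0, 1].
    """
    f_r, f_g, f_b, f_a = fg
    b_r, b_g, b_b, b_a = bg

    # Resulting coverage: foreground plus whatever background still shows through.
    out_a = f_a + b_a * (1.0 - f_a)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # fully transparent result

    def blend(f, b):
        # Weight each colour by its visible coverage, then normalise.
        return (f * f_a + b * b_a * (1.0 - f_a)) / out_a

    return (blend(f_r, b_r), blend(f_g, b_g), blend(f_b, b_b), out_a)


# Hypothetical pixels: a half-transparent spaceship edge over an opaque sky.
spaceship_edge = (0.8, 0.8, 0.9, 0.5)
skyline = (0.2, 0.3, 0.6, 1.0)
print(over(spaceship_edge, skyline))
```

A real compositor applies the same idea to every pixel of every layer, typically on premultiplied images and with many more blend modes.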

Example:

In Avengers: Endgame, the time heist scenes featured characters walking through digitally recreated environments from past Marvel films. These environments were made using a combination of practical footage and digitally composited elements. [Source: Marvel VFX Breakdown by Framestore]

Why does it matter?

Compositing allows filmmakers to create visually rich environments without the cost and logistical nightmares of filming on location or the danger of blowing up real buildings.

2. Motion Tracking: Marrying Movement and Magic

Motion tracking involves following the movement of objects in a video so that new elements can be added in sync with the original footage. It’s essential for integrating computer-generated imagery (CGI) into real-world scenes and ensuring that it moves naturally.

Tools like PFTrack, Mocha, and 3DEqualizer are used to blend computer-generated images with live-action footage.
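One simple way to picture 2D tracking is template matching: take a small patch from one frame and search the next frame for the position where it matches best. The sketch below does this with a brute-force sum of squared differences on tiny grayscale grids. It is an illustration only, not how PFTrack, Mocha, or 3DEqualizer work internally, and the names `track_patch`, `make_frame`, and `TEMPLATE` are hypothetical.

```python
# A small, distinctive 2x2 grayscale patch standing in for a tracking marker.
TEMPLATE = [[9, 7],
            [5, 8]]


def make_frame(top, left, size=6):
    """Build a blank size x size frame with TEMPLATE pasted at (top, left)."""
    frame = [[0] * size for _ in range(size)]
    for i, row in enumerate(TEMPLATE):
        for j, value in enumerate(row):
            frame[top + i][left + j] = value
    return frame


def track_patch(frame, template):
    """Return (row, col) where template best matches frame (minimum SSD)."""
    t_h, t_w = len(template), len(template[0])
    f_h, f_w = len(frame), len(frame[0])
    best_pos, best_score = None, float("inf")
    for r in range(f_h - t_h + 1):
        for c in range(f_w - t_w + 1):
            score = sum(
                (frame[r + i][c + j] - template[i][j]) ** 2
                for i in range(t_h)
                for j in range(t_w)
            )
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos


frame_a = make_frame(top=1, left=1)
frame_b = make_frame(top=2, left=3)  # the marker has moved

start = track_patch(frame_a, TEMPLATE)
end = track_patch(frame_b, TEMPLATE)
offset = (end[0] - start[0], end[1] - start[1])
print(start, end, offset)  # a CG element would be shifted by this offset
```

Production trackers add sub-pixel accuracy, rotation and scale handling, and full 3D camera solves, but the underlying goal is the same: recover how the footage moved so added elements can move with it.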

Example:

In District 9, director Neill Blomkamp’s team used motion tracking to place alien characters into gritty documentary-style footage. The aliens walked, ran, and interacted seamlessly with the environment thanks to precise motion tracking. [Source: Image Engine]

Why does it matter?

Without motion tracking, CGI elements would look like they’re floating or disconnected from the live-action world.

3. Rotoscoping: The Art of Digital Isolation

Rotoscoping is the painstaking process of tracing over live-action footage, frame by frame, to isolate objects, characters, or elements for further manipulation or compositing. While AI-assisted rotoscoping tools now exist, manual rotoscoping is still widely used for precision work. It’s time-consuming but crucial for achieving high-quality VFX.

Tools like Adobe After Effects, Nuke, Silhouette, Mocha Pro, and Fusion are popular for rotoscoping.
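The output of rotoscoping is a matte: an image that marks which pixels belong to the isolated subject. As a rough sketch, the code below turns a hand-drawn outline (a list of polygon points) into a binary matte for one frame using an even-odd point-in-polygon test. The function names and the rectangular example shape are assumptions made for illustration; real roto tools use spline shapes, feathered edges, and motion blur.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd rule: count how many polygon edges a ray to the right crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize_matte(polygon, width, height):
    """Return a height x width grid: 1 inside the roto shape, 0 outside."""
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0
         for col in range(width)]
        for row in range(height)
    ]


# Hypothetical roto shape for one frame: a rectangle around the subject.
roto_shape = [(1, 1), (4, 1), (4, 3), (1, 3)]
matte = rasterize_matte(roto_shape, width=6, height=4)
for row in matte:
    print(row)
```

Repeating this for a shape that is adjusted on every frame gives a moving matte, which a compositor can then use to keep the subject and replace everything else.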

Example:

In Life of Pi, extensive rotoscoping was used to separate the boy and the boat from the real ocean to later composite in the digitally created tiger and storm effects. [Source: VFX Breakdown by fxguide]

Why does it matter?

Rotoscoping enables scene alterations, background replacements, and character enhancements without reshooting. It saves time and money and gives you creative flexibility.

4. Matte Painting: Crafting Worlds That Don’t Exist

Matte painting is the creation of detailed environments, such as mountains, cities, and alien planets, that would be impossible or impractical to film. These are often still or lightly animated images that can be integrated into live-action footage. Originally done by hand with oil on glass, matte paintings are now created digitally to produce stunning, photorealistic environments.

Common tools for matte painting include Adobe Photoshop, Krita, Corel Painter, Nuke, and Blender.

Example:

In “The Mandalorian”, digital matte painting was combined with LED stage technology (The Volume), which allowed alien landscapes to be rendered in real time. [Source: ILM]

Why does it matter?

Matte painting allows stories to take place anywhere, be it 1920s Paris or a far-off galaxy, without the need for massive sets or location shoots.

5. Particle Effects: Simulating the Chaos of Nature

Particle effects simulate large numbers of tiny elements to produce phenomena that behave like fluids or gases, such as smoke, dust, fire, sparks, rain, magic spells, and explosions. Particle systems and physics simulations generate dynamic, realistic motion that adds depth and excitement to a scene.

Houdini is widely regarded as the industry standard for such effects.
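To make the idea of a particle system concrete, here is a minimal sketch of a spark emitter: each particle has a position, a velocity, and a remaining lifetime, and every time step applies gravity, moves the particle, and removes it once its life runs out. The constants, the dictionary layout, and the names `emit` and `step` are illustrative assumptions and do not reflect Houdini's API.

```python
import random

GRAVITY = -9.8  # assumed downward acceleration, in units per second squared


def emit(count, rng):
    """Create `count` sparks at the origin with random upward velocities."""
    return [
        {
            "pos": [0.0, 0.0],
            "vel": [rng.uniform(-1.0, 1.0), rng.uniform(2.0, 5.0)],
            "life": 1.0,  # seconds until the spark disappears
        }
        for _ in range(count)
    ]


def step(particles, dt):
    """Advance every particle by dt seconds (simple Euler integration)."""
    alive = []
    for p in particles:
        p["vel"][1] += GRAVITY * dt      # gravity pulls the spark down
        p["pos"][0] += p["vel"][0] * dt  # move along x
        p["pos"][1] += p["vel"][1] * dt  # move along y
        p["life"] -= dt
        if p["life"] > 0:
            alive.append(p)              # expired sparks are dropped
    return alive


sparks = emit(100, random.Random(42))
for frame in range(3):
    sparks = step(sparks, dt=0.1)
print(len(sparks), "sparks still alive after 3 steps")
```

Production simulations layer forces such as wind, turbulence, and collisions on top of this loop and run it for millions of particles, but the emit, update, and expire cycle is the core pattern.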

Example:

In Inception, the dream world collapses amid flying debris, zero-gravity dust, and exploding buildings, all achieved through particle simulations. [Source: Digital Media World]

Why does it matter?

Particle effects inject realism and spectacle into a scene, providing tactile responses to the fantastical. With them, you can create dragon fire that burns the air or sandstorms that whip across a battlefield.

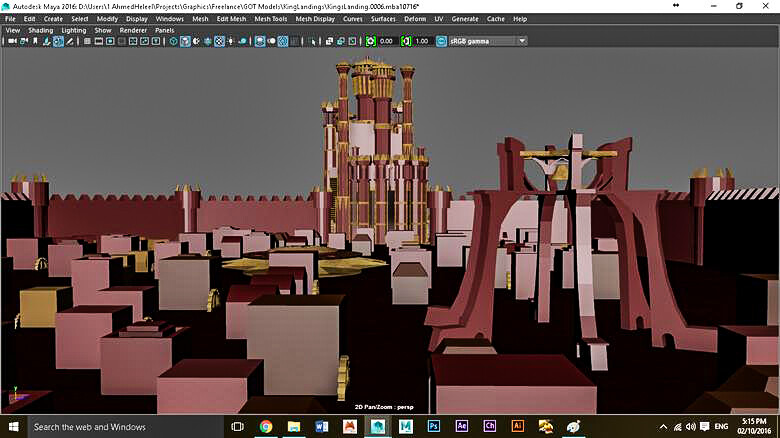

6. 3D Modeling & Animation: Sculpting the Impossible

3D modeling is the process of digitally creating three-dimensional (3D) objects such as characters, vehicles, and landscapes. Once modeled, these objects can be animated and integrated into live-action footage.

Software like Blender, Autodesk Maya, ZBrush, and Cinema 4D is commonly used to create anything from realistic characters to fictional creatures.

Example:

Game of Thrones brought dragons to life through 3D modeling. The VFX teams animated the dragons’ flight patterns, facial expressions, and combat interactions and composited them into live-action footage. [Source: Animation Mentor, Art of VFX, VFX Voice, WIRED]

Why does it matter?

3D modeling expands the range of storytelling. It lets filmmakers populate worlds with creatures and architecture that simply don’t exist or are too expensive to build.

Bonus: The Role of AI in Modern VFX

In 2025, the line between VFX and AI-generated visuals is thinner than ever. Tools like Runway ML, Wonder Studio, and Adobe Firefly are accelerating rotoscoping, object tracking, and even facial animation using machine learning.

Example:

In “Everything Everywhere All At Once”, a small team used AI-assisted tools to manage complex multiverse sequences with fewer resources. It demonstrated how even indie films can now rival blockbuster effects. [Video: A24, Screen Rant]

Why does it matter?

AI is democratizing visual effects, making high-quality VFX accessible to creators of all levels, while also pushing the boundaries of what’s possible.

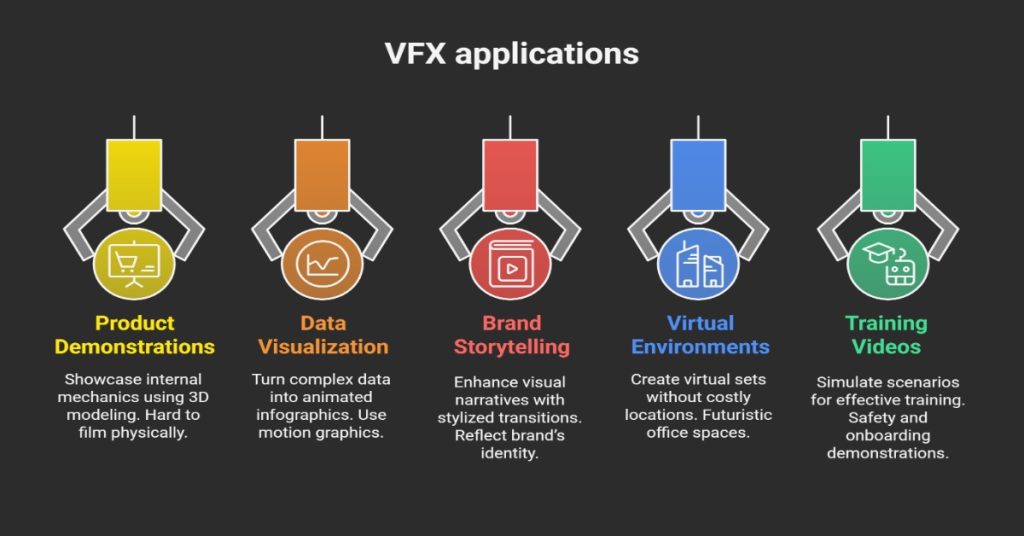

How and When Can You Incorporate VFX in Corporate Videos?

Today, businesses across industries are leveraging visual effects to create more engaging, professional, and visually dynamic content that effectively communicates their brand story, services, or innovations.

You can integrate VFX into your corporate video in several ways, depending on your goals:

- Product Demonstrations: Use 3D modeling and animation to showcase internal mechanics or features of a product that are hard to film physically.

- Data Visualization: Turn complex data and metrics into compelling animated infographics using motion graphics.

- Brand Storytelling: Enhance visual narratives with stylized transitions, environment changes, or effects that reflect your brand’s identity.

- Virtual Environments: Create virtual sets or futuristic office spaces without the need for costly locations or set designs.

- Training or Explainer Videos: Use VFX to simulate scenarios or environments for effective training, safety demonstrations, or onboarding.

Example:

One standout example of VFX in corporate branding comes from Kia’s Sportage campaign titled “Inspires Attention.” In this visually striking video, a single firefly comes to life and curiously approaches the vehicle. As the narrative unfolds, hundreds of fireflies join in, creating a magical, cinematic moment that highlights the allure of the car.

This scene was brought to life using advanced character animation, particle simulations, and compositing techniques, showcasing how VFX can be used to tell an emotional story rather than just demonstrate features. By combining storytelling with stunning visuals, Kia elevated a typical car ad into an engaging visual experience. This proves how VFX can transform corporate videos into memorable brand statements. [Source: GlassworksVFX, MediaCat]

Conclusion: Why Understanding VFX Matters

Visual effects are tools that shape how we feel, interpret, and remember stories. Every explosion, every creature, every surreal transformation is grounded in deeply structured workflows and techniques. They can simulate reality and amplify imagination without limit.

Understanding the foundational concepts of visual effects gives creators the tools not only to replicate what’s been done but also to innovate and push boundaries. The next great cinematic moment might not come from a studio with a billion-dollar budget, but from an artist who understands how to mix compositing with storytelling, or particle simulations with raw emotion.

Frequently Asked Questions (FAQs)

Q1- What are the fundamentals of VFX?

The fundamentals of VFX (Visual Effects) include a combination of artistic and technical elements used to create imagery that cannot be captured in real life. Key concepts include compositing, 3D modeling, animation, lighting, rendering, and simulation. An understanding of camera tracking, green screen techniques, and digital matte painting is also essential.

Q2- What is modeling in VFX?

Modeling in VFX refers to the process of creating 3D representations of objects, characters, or environments. Artists use specialized software to sculpt and define the shape, structure, and surface details of digital assets. These models form the basis for further processes like texturing, rigging, and animation. Good modeling ensures that the object or character looks realistic and performs well in the visual scene.

Q3- Which movie first used VFX?

One of the earliest and best-known uses of VFX dates back to the silent film era, with “A Trip to the Moon” (1902) by Georges Méliès, although simple trick shots appeared a few years earlier, for example the stop trick in “The Execution of Mary Stuart” (1895). Méliès, a magician and filmmaker, used techniques like multiple exposures, time-lapse, and hand-painted frames to create fantastical visuals. Although primitive by today’s standards, his work laid the groundwork for modern VFX in cinema.

Q4- Which software is used for VFX?

Several software tools are widely used in VFX production, depending on the task. Popular choices include Autodesk Maya and Blender for modeling and animation, Adobe After Effects and Nuke for compositing, Houdini for simulations and visual effects, and Cinema 4D for motion graphics. The choice of software often depends on the project needs and the studio’s pipeline.

Q5- What is the new technology in VFX?

One of the most impactful new technologies in VFX is virtual production, especially using LED volume stages (popularized by shows like The Mandalorian). It allows real-time rendering of digital environments on large LED screens during live filming. AI-powered tools and machine learning are also being used for motion capture, rotoscoping, and upscaling footage, speeding up workflows and enhancing quality.